Transpose Convolution Explained

Source: Primary

Read More

Upsampling vs Transpose Convolution: https://stackoverflow.com/questions/48226783/

Upsampling: [Not trainable]

In Keras with the TensorFlow backend, the UpSampling layer calls TensorFlow's resize_images function. This is essentially an interpolation with no trainable weights, like resizing an image.
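A minimal NumPy sketch of weight-free upsampling, assuming nearest-neighbour interpolation (the default for Keras UpSampling2D). It uses plain NumPy rather than Keras, and `upsample_nearest` is a hypothetical helper name. Every pixel is simply copied into a factor x factor block, so there is nothing to learn.

```python
import numpy as np

def upsample_nearest(x, factor=2):
    """Nearest-neighbour upsampling of a 2-D array (hypothetical helper).

    Every pixel is repeated factor times along rows and columns; no weights are involved.
    """
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)

x = np.array([[1, 2], [3, 4]], dtype=np.float32)
print(upsample_nearest(x))
```

Contrast this with a transpose convolution, where the values written into the larger output depend on a learned kernel.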

For Caffe: https://stackoverflow.com/questions/40872914/

In Caffe, upsampling can be done with a Deconvolution layer whose weights are fixed to a bilinear kernel. Specifying num_output: {{C}} and group: {{C}} makes it a channel-wise convolution. The filter shape of this Deconvolution layer is (C, 1, K, K), where K is kernel_size, and the bilinear weight filler sets the same (K, K) interpolation kernel for every channel. The resulting top feature map has shape (B, C, factor * H, factor * W). The learning rate and weight decay multipliers are set to 0 so that the bilinear interpolation coefficients stay unchanged during training.

```
layer {
  name: "upsample"
  type: "Deconvolution"
  bottom: "{{bottom_name}}"
  top: "{{top_name}}"
  convolution_param {
    kernel_size: {{2 * factor - factor % 2}}
    stride: {{factor}}
    num_output: {{C}}
    group: {{C}}
    pad: {{ceil((factor - 1) / 2.)}}
    weight_filler: { type: "bilinear" }
    bias_term: false
  }
  param { lr_mult: 0 decay_mult: 0 }
}
```
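The `{{...}}` fields in the layer definition above are template placeholders. As a minimal sketch, the values they resolve to for a given factor can be computed in Python. The bilinear kernel here is modelled on the `upsample_filt` helper used with FCN; treat it as an assumption rather than Caffe's exact filler code. The output-size check uses Caffe's Deconvolution size rule, stride * (in - 1) + kernel - 2 * pad (no dilation). All function names are hypothetical.

```python
import math
import numpy as np

def bilinear_deconv_params(factor):
    """Kernel size and padding that the template above produces for `factor`."""
    kernel_size = 2 * factor - factor % 2
    pad = int(math.ceil((factor - 1) / 2.0))
    return kernel_size, pad

def bilinear_kernel(size):
    """(size, size) bilinear interpolation kernel, in the style of FCN's upsample_filt."""
    f = (size + 1) // 2
    center = f - 1 if size % 2 == 1 else f - 0.5
    og = np.ogrid[:size, :size]
    return (1 - np.abs(og[0] - center) / f) * (1 - np.abs(og[1] - center) / f)

def deconv_output_size(in_size, kernel_size, stride, pad):
    """Deconvolution output size along one axis (dilation 1)."""
    return stride * (in_size - 1) + kernel_size - 2 * pad

for factor in (2, 3, 4):
    k, p = bilinear_deconv_params(factor)
    print(factor, k, p, deconv_output_size(10, k, factor, p))
```

For every factor, the output size comes out as exactly factor * H, which is why the kernel size and padding are chosen this way.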

Transpose Convolution: [Trainable]

In Keras, the transposed convolution layer (Conv2DTranspose) calls TensorFlow's conv2d_transpose function. It has a kernel, so it is trainable.

Convolution:

Say we have a 5x5 input and convolve it with a 3x3 filter, stride 2x2 and 'valid' padding (padding = 0). Then

output_size = floor( (input_size - filter_size + 2 * padding) / stride ) + 1

https://ezyang.github.io/convolution-visualizer/index.html

or, output_size = floor( (I + P_left + P_right - D*(F - 1) - 1) / S ) + 1

where I = input size, F = filter size, D = dilation, S = stride, and P_left, P_right = padding on each side.

https://github.com/vlfeat/matconvnet/issues/1010

For the above example:

output_size = floor( (5 - 3 + 2 * 0) / 2 ) + 1 = 1 + 1 = 2
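The general formula can be written as a small Python function. This is a sketch; `conv_output_size` is a hypothetical name, and integer floor division plays the role of the floor.

```python
def conv_output_size(i, f, s=1, p_left=0, p_right=0, d=1):
    """Output length of a convolution along one axis.

    i: input size, f: filter size, s: stride,
    p_left / p_right: zero padding on each side, d: dilation.
    """
    return (i + p_left + p_right - d * (f - 1) - 1) // s + 1

print(conv_output_size(5, 3, s=2))  # the 5x5 -> 2x2 example
print(conv_output_size(6, 3, s=2))  # a 6x6 input also gives 2x2
```

With d = 1 this reduces to the first formula. Because both 5 and 6 map to 2 when the stride is 2, going back from 2x2 is ambiguous. This is one reason tf.nn.conv2d_transpose takes an explicit output_shape.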

Transpose Convolution:

Now say we want to do the reverse: go from 2x2 back to 5x5.

In general, the output size of a transpose convolution can be calculated as:

transpose_size = input_size * stride   [for 'SAME' padding]

transpose_size = input_size * stride + max(filter_size - stride, 0)   [for 'VALID' padding]

For the above example (stride 2, 3x3 filter, 'VALID'):

transpose_size = 2 * 2 + max(3 - 2, 0) = 4 + 1 = 5
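The two size formulas as a Python sketch (`transpose_output_size` is a hypothetical name):

```python
def transpose_output_size(i, f, s, padding="VALID"):
    """Output length of a transpose convolution along one axis, using the formulas above."""
    if padding == "SAME":
        return i * s
    if padding == "VALID":
        return i * s + max(f - s, 0)
    raise ValueError("padding must be 'SAME' or 'VALID'")

print(transpose_output_size(2, 3, 2))          # 'VALID': the 2x2 -> 5x5 example
print(transpose_output_size(2, 3, 2, "SAME"))  # 'SAME'
```

When f >= s, the 'VALID' result equals (i - 1) * s + f. That is exactly the span covered when each input pixel stamps an f-wide copy of the filter every s pixels.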

Process:

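$$
\text{input} = \begin{bmatrix} a & b \\ c & d \end{bmatrix}, \quad
\text{filter} = \begin{bmatrix} w_1 & w_2 & w_3 \\ w_4 & w_5 & w_6 \\ w_7 & w_8 & w_9 \end{bmatrix}
$$

$$
\text{output} = \begin{bmatrix}
a w_1 & a w_2 & a w_3 + b w_1 & b w_2 & b w_3 \\
a w_4 & a w_5 & a w_6 + b w_4 & b w_5 & b w_6 \\
a w_7 + c w_1 & a w_8 + c w_2 & a w_9 + b w_7 + c w_3 + d w_1 & b w_8 + d w_2 & b w_9 + d w_3 \\
c w_4 & c w_5 & c w_6 + d w_4 & d w_5 & d w_6 \\
c w_7 & c w_8 & c w_9 + d w_7 & d w_8 & d w_9
\end{bmatrix}
$$

With stride 2, the 3x3 block for each input value starts 2 columns (or rows) after the previous one, so the middle row and column collect overlapping terms.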

1. Multiply all the values of the filter by the first (top-left) value of the input.

2. Place the resulting 3x3 block in the top-left corner of the output matrix.

3. Multiply all the values of the filter by the second input value and place the result in the output matrix, shifted by the stride (2 columns to the right here).

4. Where blocks overlap, add the values.

5. Repeat for every remaining input value.

In short: each input pixel scales the whole filter, so with a 3x3 filter one input pixel produces a 3x3 block of output. These blocks are placed stride pixels apart and summed where they overlap.
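The steps above can be sketched directly in NumPy for a single channel with no padding. `transpose_conv2d` is a hypothetical helper, not a library function. Its output size is (H - 1) * stride + K, which matches the 'VALID' formula whenever the filter is at least as large as the stride.

```python
import numpy as np

def transpose_conv2d(x, w, stride):
    """Single-channel transpose convolution by scatter-add (no padding).

    Each input pixel scales the whole filter; the scaled copies are placed
    `stride` pixels apart and summed where they overlap.
    """
    ih, iw = x.shape
    kh, kw = w.shape
    out = np.zeros(((ih - 1) * stride + kh, (iw - 1) * stride + kw),
                   dtype=np.result_type(x, w))
    for r in range(ih):
        for c in range(iw):
            out[r * stride:r * stride + kh, c * stride:c * stride + kw] += x[r, c] * w
    return out

x = np.array([[1, 2], [3, 4]], dtype=np.float32)
w = np.ones((3, 3), dtype=np.float32)
print(transpose_conv2d(x, w, stride=2))
```

With the 2x2 input [1 2; 3 4] and an all-ones 3x3 filter, this reproduces the 5x5 result of the worked example that follows.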

Other names:

- Deconvolution (misleading, since it does not invert a convolution)

- Upconvolution

- Fractionally strided convolution

- Backward strided convolution.
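The name "fractionally strided convolution" comes from an equivalent way of computing the same result: spread the input out with zeros (as if the stride were 1/2), pad it, then run an ordinary stride-1 convolution with the filter rotated by 180 degrees. A minimal NumPy sketch of that construction, for one channel with no padding; `fractionally_strided_conv2d` is a hypothetical name.

```python
import numpy as np

def fractionally_strided_conv2d(x, w, stride):
    """Transpose convolution computed as an ordinary stride-1 convolution.

    1. Insert (stride - 1) zeros between neighbouring input pixels.
    2. Zero-pad by (K - 1) on every side ("full" padding).
    3. Slide the 180-degree-rotated filter over the result with stride 1.
    """
    ih, iw = x.shape
    kh, kw = w.shape
    # Step 1: dilate the input with zeros
    up = np.zeros(((ih - 1) * stride + 1, (iw - 1) * stride + 1), dtype=np.result_type(x, w))
    up[::stride, ::stride] = x
    # Step 2: full zero padding
    up = np.pad(up, ((kh - 1, kh - 1), (kw - 1, kw - 1)), mode="constant")
    # Step 3: stride-1 cross-correlation with the flipped filter
    wf = w[::-1, ::-1]
    oh, ow = up.shape[0] - kh + 1, up.shape[1] - kw + 1
    out = np.zeros((oh, ow), dtype=up.dtype)
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(up[r:r + kh, c:c + kw] * wf)
    return out

x = np.array([[1, 2], [3, 4]], dtype=np.float32)
w = np.ones((3, 3), dtype=np.float32)
print(fractionally_strided_conv2d(x, w, stride=2))
```

This gives the same 5x5 matrix as the multiply-and-add process, for any filter, not just symmetric ones. The scatter form does less arithmetic because it never multiplies the inserted zeros.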

Example with Code:

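$$
\text{input} = \begin{bmatrix} 1 & 2 \\ 3 & 4 \end{bmatrix}, \quad
\text{filter} = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}
$$

$$
\text{output} = \begin{bmatrix}
1 & 1 & 1+2 & 2 & 2 \\
1 & 1 & 1+2 & 2 & 2 \\
1+3 & 1+3 & 1+2+3+4 & 2+4 & 2+4 \\
3 & 3 & 3+4 & 4 & 4 \\
3 & 3 & 3+4 & 4 & 4
\end{bmatrix}
= \begin{bmatrix}
1 & 1 & 3 & 2 & 2 \\
1 & 1 & 3 & 2 & 2 \\
4 & 4 & 10 & 6 & 6 \\
3 & 3 & 7 & 4 & 4 \\
3 & 3 & 7 & 4 & 4
\end{bmatrix}
$$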

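```python
import numpy as np
import tensorflow.compat.v1 as tf  # TF1-style graph API; also available under TF 2.x

tf.disable_v2_behavior()

# 2x2 input, fed as a flat vector of 4 values and reshaped to NHWC
X = tf.placeholder(tf.float32, [None, 4], name="X_placeholder")
X_mat = tf.reshape(X, shape=[-1, 2, 2, 1])

# 3x3 filter of ones; tf.truncated_normal_initializer(stddev=0.001) gives a random start instead
value = np.ones((3, 3, 1, 1), np.float32)
w_init = tf.constant_initializer(value)
# conv2d_transpose expects the filter as [height, width, output_channels, input_channels]
kernel1 = tf.get_variable('kernel1', [3, 3, 1, int(X_mat.shape[-1])], initializer=w_init)

stride1 = [1, 2, 2, 1]
output_shape1 = [1, 5, 5, 1]  # given explicitly: a 2x2 input is consistent with more than one output size
padding1 = 'VALID'

t_conv1 = tf.nn.conv2d_transpose(X_mat, filter=kernel1, strides=stride1,
                                 padding=padding1, output_shape=output_shape1)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    X_1 = np.asarray([[1, 2, 3, 4]], np.float32)
    # Use new names so the graph tensors t_conv1 / X_mat are not overwritten
    t_conv1_val, X_mat_val = sess.run([t_conv1, X_mat], feed_dict={X: X_1})
    print('t_conv1 : ', t_conv1_val, '\n\n\nX mat: ', X_mat_val)
```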

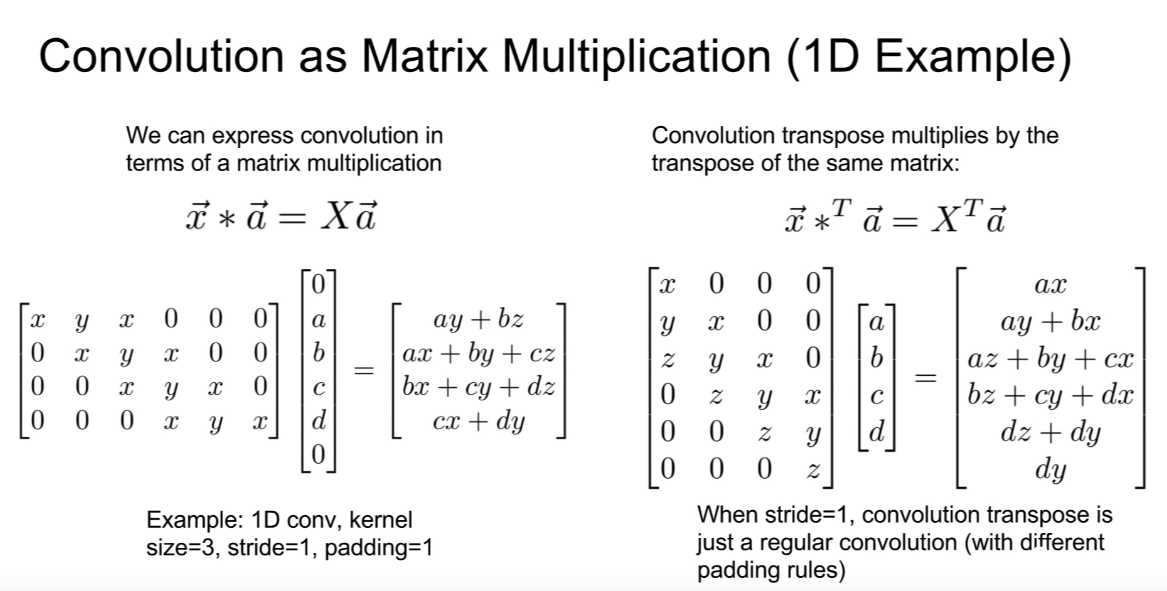

Why Transpose?
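One common explanation for the name: a convolution can be written as a matrix multiplication y = C x, where x is the flattened input and each row of C holds the filter at one output position. For the 5x5 input, 3x3 filter and stride 2 used above, C has shape 4 x 25. Multiplying by the transpose C^T maps a 4-value (2x2) input to a 25-value (5x5) output, and that product is the transpose convolution. The NumPy sketch below builds C explicitly. `conv_matrix` is a hypothetical helper, and the convolution is computed as cross-correlation, as deep-learning frameworks do.

```python
import numpy as np

def conv_matrix(in_size, w, stride):
    """Hypothetical helper: matrix C with C @ x.ravel() equal to the 'VALID',
    stride-`stride` convolution (cross-correlation) of a square input with w."""
    k = w.shape[0]
    out_size = (in_size - k) // stride + 1
    C = np.zeros((out_size * out_size, in_size * in_size), dtype=w.dtype)
    for r in range(out_size):
        for c in range(out_size):
            # Row of C for output position (r, c): the filter placed on its input patch
            patch = np.zeros((in_size, in_size), dtype=w.dtype)
            patch[r * stride:r * stride + k, c * stride:c * stride + k] = w
            C[r * out_size + c] = patch.ravel()
    return C

w = np.ones((3, 3), dtype=np.float32)
C = conv_matrix(5, w, stride=2)        # shape (4, 25): maps a 5x5 input to a 2x2 output
y = np.array([1, 2, 3, 4], dtype=np.float32)
print((C.T @ y).reshape(5, 5))         # C^T maps a 2x2 input to a 5x5 output
```

The printed matrix matches the worked example. C^T restores the shape, not the original values, so the operation is a transpose rather than an inverse.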

```python
import numpy as np
import caffe
from caffe import layers

caffe.set_mode_cpu()

# Defining the network: a 1x1x2x2 input and a 3x3, stride-2 Deconvolution with all-ones weights
n = caffe.NetSpec()
n.data = layers.Input(input_param={'shape': {'dim': [1, 1, 2, 2]}})
n.deconv = layers.Deconvolution(n.data,
                                convolution_param=dict(num_output=1, bias_term=False, pad=0,
                                                       kernel_size=3, group=1, stride=2,
                                                       weight_filler=dict(type='constant', value=1)))

# Saving the network prototxt to load later
with open('mydeconvnet.prototxt', 'w') as f:
    f.write(str(n.to_proto()))

# Load the net and predict
net = caffe.Net('mydeconvnet.prototxt', caffe.TEST)
im = np.asarray([[1, 2, 3, 4]], np.float32)
im_input = np.reshape(im, (-1, 1, 2, 2))
print(im_input.shape)
net.blobs['data'].reshape(*im_input.shape)
net.blobs['data'].data[...] = im_input
for k, v in net.blobs.items():
    print(k, v.data.shape)
net.forward()
print(net.blobs['deconv'].data)

# Sources:
# https://stackoverflow.com/questions/40986009/how-to-programmatically-generate-deploy-txt-for-caffe-in-python
# http://www.tarekallamjr.com/blog/posts/Programatically-define-net-with-PyCaffe/
# https://github.com/shelhamer/fcn.berkeleyvision.org/blob/master/voc-fcn32s/net.py#L58-L61
# https://programtalk.com/vs2/python/4583/dilation/network.py/
# https://github.com/BVLC/caffe/issues/4052
# https://www.jianshu.com/p/e05d1b210fcb
# http://christopher5106.github.io/deep/learning/2015/09/04/Deep-learning-tutorial-on-Caffe-Technology.html
```