Principal Component Analysis (PCA)

https://www.youtube.com/watch?v=TJdH6rPA-TI

- Finds the direction (dimension) along which the projected data has maximum spread (variance); equivalently, the direction that minimizes the projection (reconstruction) error

- Principal components are orthogonal

- PCA projects the data onto a new set of axes, the principal components

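Mathematically, PCA is performed by carrying out an eigen-decomposition of the covariance matrix: the eigenvectors are the principal components, and the eigenvalues are the variances along them.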

PCA is a variance-maximizing technique that projects original data onto a direction that maximizes variance.

- Performs linear mapping of original data to a lower-dimensional space such that the variance of data in the low-dimensional representation is maximized.
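The eigen-decomposition route described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `pca_eig`, the synthetic two-feature data, and the choice of `n_components=1` are all assumptions made for the example.

```python
import numpy as np

def pca_eig(X, n_components):
    """Project X (n_samples x n_features) onto its top principal components.

    Hypothetical helper for illustration only.
    """
    # Center each feature so variance is measured about the mean.
    X_centered = X - X.mean(axis=0)
    # Covariance matrix of the features (columns are the variables).
    cov = np.cov(X_centered, rowvar=False)
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Reorder so the largest-variance direction comes first.
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :n_components]
    # Linear mapping to the lower-dimensional space.
    projected = X_centered @ components
    return projected, components, eigvals

# Synthetic data (an assumption): two strongly correlated features.
rng = np.random.default_rng(0)
x = rng.normal(size=200)
X = np.column_stack([x, 2 * x + rng.normal(scale=0.1, size=200)])

projected, components, eigvals = pca_eig(X, n_components=1)
```

With this setup, the sample variance of the projected data along the first component equals the largest eigenvalue of the covariance matrix, which is the sense in which the projection "maximizes variance".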

A covariance matrix is a square matrix giving the covariance between each pair of elements of a given random vector.
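Written out, under the assumed notation of a random vector $X = (X_1, \dots, X_n)$ with mean $\mu = \mathbb{E}[X]$ (these symbols are not from the notes), the definition reads roughly as:

$$
\Sigma_{ij} = \operatorname{Cov}(X_i, X_j) = \mathbb{E}\big[(X_i - \mu_i)(X_j - \mu_j)\big],
\qquad
\Sigma = \mathbb{E}\big[(X - \mu)(X - \mu)^{\top}\big]
$$

The diagonal entries $\Sigma_{ii}$ are the variances of the individual elements, and $\Sigma$ is symmetric because $\operatorname{Cov}(X_i, X_j) = \operatorname{Cov}(X_j, X_i)$.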

https://www.geeksforgeeks.org/mathematics-covariance-and-correlation/

Covariance:

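Measures the joint variability of two random variables. Its sign gives the direction of the linear relationship between them; its magnitude depends on the units of the variables, so it can range from -infinity to +infinity.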

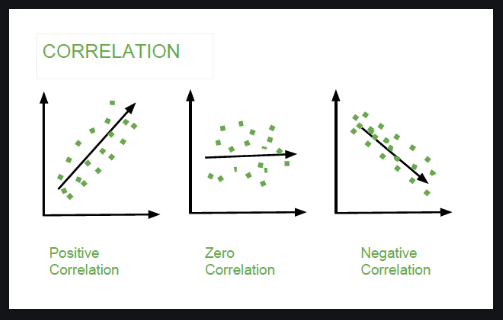

Correlation:

Covariance normalized by the standard deviations of the two variables; it is unitless and ranges from -1 to +1.
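A small sketch of the difference in range, assuming synthetic height and weight data invented for this example (the variable names and numbers are not from the notes): changing the units of one variable rescales the covariance but leaves the correlation untouched.

```python
import numpy as np

# Synthetic data (an assumption): heights in metres and loosely related weights.
rng = np.random.default_rng(1)
height_m = rng.normal(1.7, 0.1, size=500)
weight_kg = 60 * height_m + rng.normal(0, 5, size=500)

# np.cov and np.corrcoef return 2x2 matrices; [0, 1] is the cross term.
cov_m = np.cov(height_m, weight_kg)[0, 1]
cov_cm = np.cov(height_m * 100, weight_kg)[0, 1]   # same heights, in centimetres

corr_m = np.corrcoef(height_m, weight_kg)[0, 1]
corr_cm = np.corrcoef(height_m * 100, weight_kg)[0, 1]

# Correlation is covariance divided by the product of the standard deviations.
corr_manual = cov_m / (np.std(height_m, ddof=1) * np.std(weight_kg, ddof=1))
```

Here `cov_cm` is 100 times `cov_m`, while `corr_m` and `corr_cm` agree, which is why correlation is the easier quantity to compare across differently scaled variables.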